ChatGPT actually gave some useful suggestions!

Thought I'd share them here.

Physical impacts can cause a range of issues, from visible damage to subtle problems that only manifest under specific conditions. Here's a systematic approach to surveying the motherboard for damage:

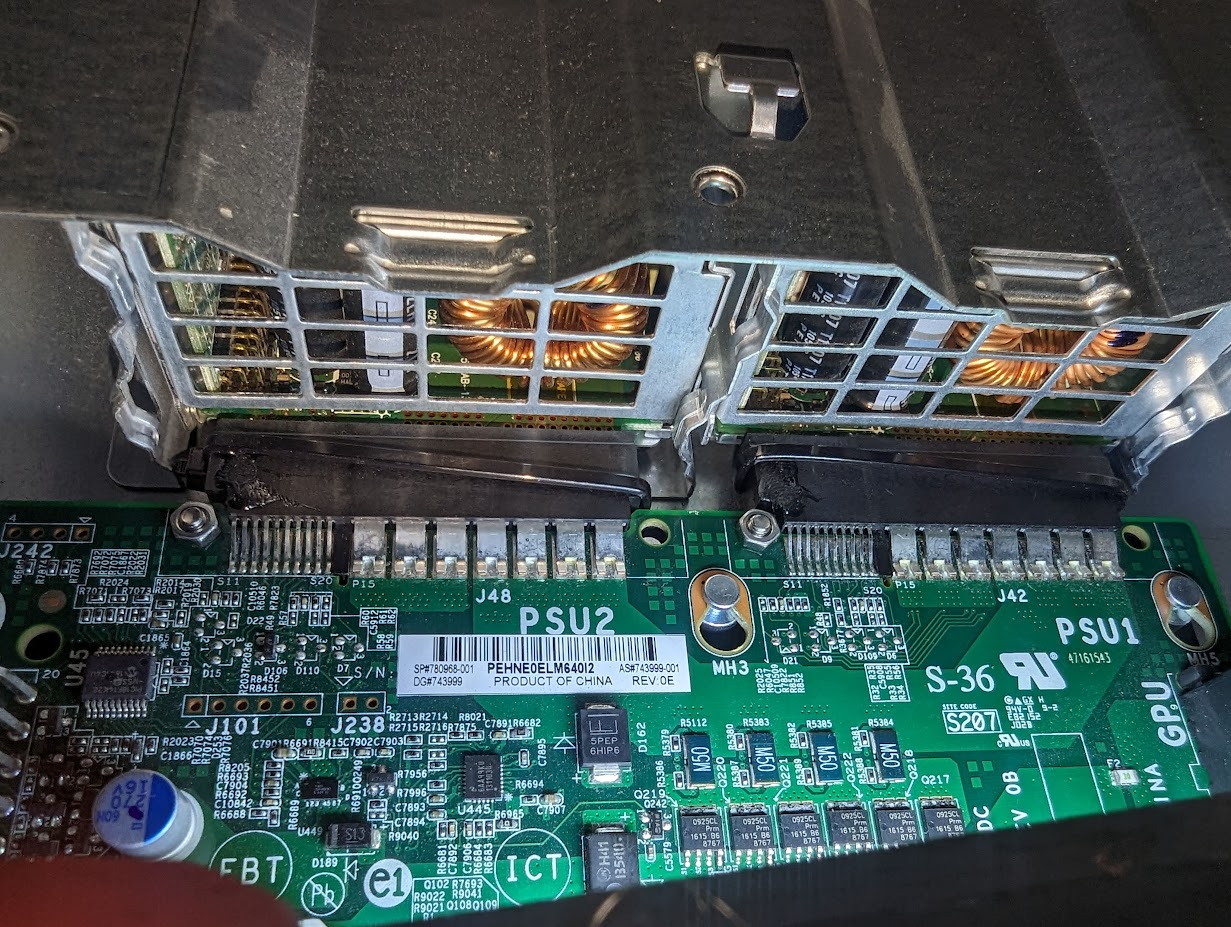

1. **Visual Inspection**:

- **Magnifying Glass or Loupe**: Use a magnifying glass or a loupe to inspect the motherboard closely. Look for any visible cracks, broken traces, or damaged components.

- **Check Connectors**: Ensure that all connectors, including the PCIe slots, are intact and not bent or broken.

- **Inspect Solder Joints**: Look for any solder joints that might have cracked or become loose due to the impact.

- **Check for Debris**: Ensure there's no debris or foreign objects lodged between components or traces.

2. **Physical Inspection**:

- **Press Gently**: With the server powered off and unplugged, gently press down on various parts of the motherboard. Unusual flex or movement could indicate a broken stand-off or mounting point.

- **Check the Back**: Turn the motherboard over and inspect the back for any signs of damage or unusual marks.

3. **Functional Testing**:

- **Power On**: If the visual and physical inspections don't reveal any issues, consider powering on the server. Listen for any unusual sounds and observe any irregular LED patterns.

- **BIOS/UEFI Check**: Access the BIOS/UEFI and check for any hardware errors or warnings. Most systems run a power-on self-test (POST) during startup and may report issues there.

- **PCIe Slot Test**: Since the impact dislodged a card from the PCIe slot, test that specific slot with a known working card to ensure it's still functional. Also, test the previously dislodged card in another slot or system to ensure the card itself isn't damaged.

- **Stress Testing**: Use software tools to stress-test the system. Prime95 (CPU), MemTest86 (memory), and FurMark (GPU) can help surface faults that only appear under sustained load.

4. **Check Peripheral Components**:

- **RAM**: Ensure that all RAM modules are seated correctly. Consider running a memory test to ensure they're functioning correctly.

- **Storage**: Check that all storage devices (HDDs, SSDs) are securely connected and are recognized by the system.

- **Cooling**: Ensure that all fans are operational and that heatsinks are securely attached.

5. **External Inspection**:

- **Case and Chassis**: Check the server case and chassis for any signs of deformation or damage. Ensure that the motherboard is securely and correctly mounted within the case.

6. **Seek Expert Assistance**:

- If you're unsure about any findings or if the server behaves erratically, consider seeking assistance from a professional or a specialized repair service. They might have specialized equipment, like multimeters or oscilloscopes, to diagnose issues more accurately.

7. **Document Everything**:

- Take photos of any visible damage or irregularities. This can be useful for insurance claims, future reference, or if you decide to seek professional assistance.
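For the PCIe slot test in step 3, one extra check that might help on a Linux server: a damaged slot or card sometimes still works but negotiates fewer lanes than it should. The sketch below is my own illustration, not part of the original suggestions. It assumes you have the text output of `lspci -vv` (run as root so the link fields are shown) and compares the `LnkCap` width against the `LnkSta` width for each device. The function names are hypothetical.

```python
import re
import subprocess

# Matches the width field in lspci -vv "LnkCap:" / "LnkSta:" lines, e.g. "Width x16".
WIDTH_RE = re.compile(r"Width x(\d+)")


def read_lspci():
    """Return the text of `lspci -vv` (assumes a Linux host with pciutils installed)."""
    return subprocess.run(
        ["lspci", "-vv"], capture_output=True, text=True, check=True
    ).stdout


def find_downgraded_links(lspci_text):
    """Return (device, capable_width, actual_width) for links running narrower than capable."""
    results = []
    device = None
    cap_width = None
    for line in lspci_text.splitlines():
        if line and not line[0].isspace():
            # An unindented line starts a new device record.
            device = line.strip()
            cap_width = None
            continue
        stripped = line.strip()
        match = WIDTH_RE.search(stripped)
        if not match:
            continue
        if stripped.startswith("LnkCap:"):
            # Maximum width the device itself supports.
            cap_width = int(match.group(1))
        elif stripped.startswith("LnkSta:") and cap_width is not None:
            # Width actually negotiated on the current link.
            sta_width = int(match.group(1))
            if sta_width < cap_width:
                results.append((device, cap_width, sta_width))
    return results
```

Calling `find_downgraded_links(read_lspci())` lists devices whose link is narrower than they support. A hit is only a hint: a card can legitimately sit in a slot that is wired for fewer lanes, so compare against the slot's documented width before blaming the impact.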

Remember, even if the server appears to function normally after the impact, there could be latent issues that might manifest later. It's essential to be thorough in your inspection and testing to ensure the server's reliability and longevity.
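To keep an eye out for those latent issues, it may be worth scanning the kernel log periodically once the server is back in service. Here is a minimal sketch, assuming a Linux host where you can capture the output of `dmesg` or `journalctl -k` as text. The keyword list is my own guess at common hardware-fault markers and is not exhaustive, and `scan_kernel_log` is a hypothetical helper name.

```python
import re

# Substrings that commonly appear in Linux kernel messages about hardware faults.
# This list is an assumption for illustration, not an exhaustive catalogue.
PATTERNS = [
    r"Hardware Error",
    r"Machine check",
    r"\bAER\b",       # PCIe Advanced Error Reporting
    r"\bEDAC\b",      # memory error detection and correction
    r"I/O error",
]
HW_ERROR_RE = re.compile("|".join(PATTERNS), re.IGNORECASE)


def scan_kernel_log(log_text):
    """Return the log lines that mention any of the hardware-fault keywords."""
    return [line for line in log_text.splitlines() if HW_ERROR_RE.search(line)]
```

Treat the matches as candidates to read, not as a verdict. Some harmless boot messages mention the same keywords, so a hit only means the line deserves a look.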
